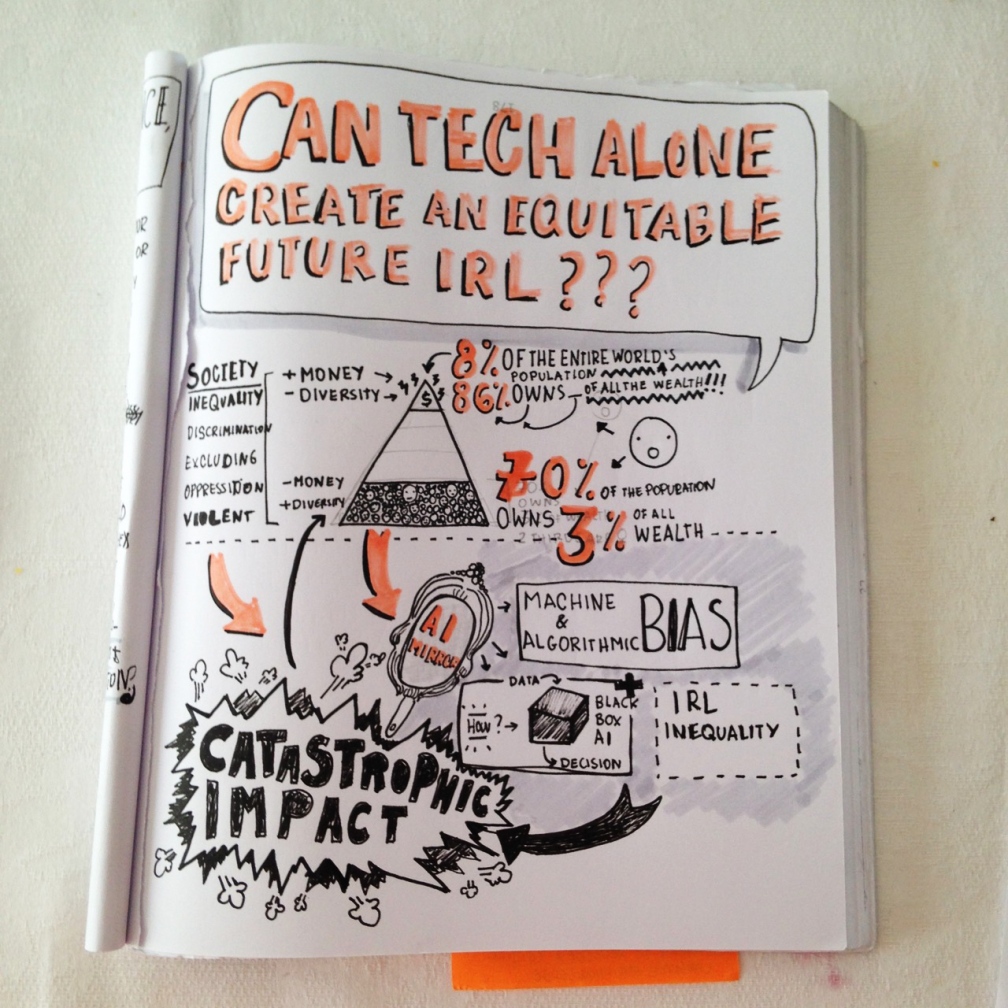

This blog post is a collage of relevant articles, papers, etc. that critically look at a few dominant notions around the social inequality embodied in Artificial Intelligence (AI):

- Bias

- Decision making

- Outsourcing decision making to algorithms via scientists and engineers

- Black boxing AI

Disclaimer: I am not an expert on AI. I am doing this in the context of a design research project on Amazon's Alexa. Take this with a pinch of salt and add your knowledge in the comments.

Artificial Intelligence – an area of computer science that is thriving due to the abundance of data — is focused on enabling computers to perform functions normally associated with human cognitive behaviour.

Source: http://news.itu.int/ai-can-help-to-bridge-the-digital-divide-and-create-an-inclusive-society/

///

Bias

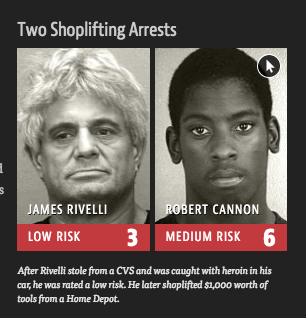

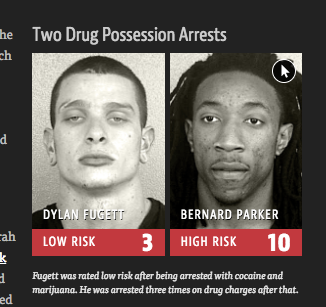

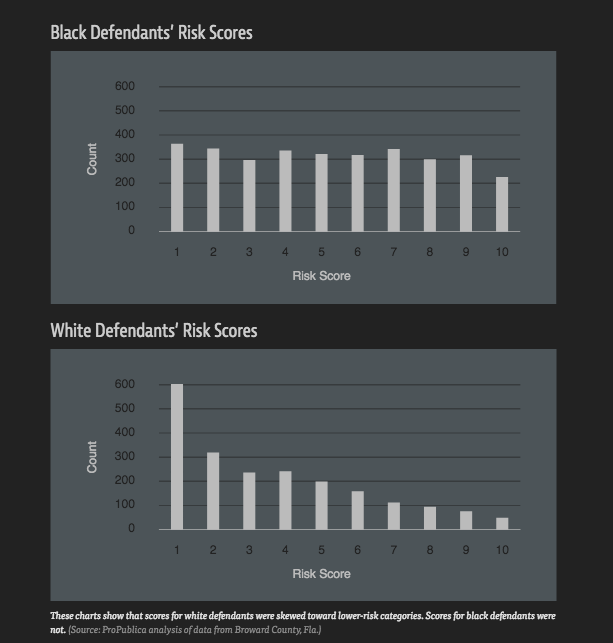

These images come from ProPublica's ‘Machine Bias’ article, which looks at an AI-based risk assessment tool used in courtrooms across the U.S. to inform decisions about who can be set free at every stage of the criminal justice system.

*jaw drops here*

This is a clear example of machine bias:

Said simply, machine bias is programming that assumes the prejudices of its creators or data.

Source: https://becominghuman.ai/how-to-prevent-bias-in-machine-learning-fbd9adf1198

Bias can surface in various ways. Sometimes the training data is insufficiently diverse, prompting the software to guess based on what it “knows.” In 2015, Google’s photo software infamously tagged two black users “gorillas” because the data lacked enough examples of people of color.

Even when the data accurately mirrors reality, the algorithms can still get the answer wrong, incorrectly guessing that a particular nurse in a photo or text is female, say, because the data shows fewer men are nurses. In some cases the algorithms are trained to learn from the people using the software and, over time, pick up the biases of those human users.

AI also has a disconcertingly human habit of amplifying stereotypes. PhD students at the University of Virginia and the University of Washington examined a public dataset of photos and found that the images of people cooking were 33 percent more likely to picture women than men. When they ran the images through an AI model, the algorithms said women were 68 percent more likely to appear in the cooking photos.
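The nurse and cooking examples share a mechanism that a toy model makes easy to see. The sketch below is a hypothetical illustration, not the model used in the University of Virginia and University of Washington study: it assumes a "model" that ignores each photo and falls back on the most common label it saw for that context. With invented counts of 60 women and 40 men in cooking photos, that shortcut turns a 60/40 skew in the data into a 100/0 skew in the output, and every man in the set gets the wrong label.

```python
from collections import Counter

# Hypothetical toy labels for photos of people cooking.
# The 60/40 split is invented for illustration; it is NOT the study's data.
training_labels = ["woman"] * 60 + ["man"] * 40


def majority_label(labels):
    """Return the label that appears most often in `labels`."""
    return Counter(labels).most_common(1)[0][0]


def share(labels, value):
    """Fraction of entries in `labels` equal to `value`."""
    return labels.count(value) / len(labels)


# A lazy "model" that ignores each photo and always guesses the majority label.
guess = majority_label(training_labels)
predictions = [guess for _ in training_labels]

data_share = share(training_labels, "woman")   # skew present in the data
model_share = share(predictions, "woman")      # skew in the model's output
mislabelled = sum(p != t for p, t in zip(predictions, training_labels))

print(f"women in data: {data_share:.0%}, women in predictions: {model_share:.0%}")
print(f"people given the wrong label: {mislabelled}")
```

Real models are far less crude, but the sketch shows how a system can be "accurate on average" about a pattern in the data while amplifying it and getting individuals wrong.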

///

Decision making

The concepts of privilege and discrimination are deeply embedded within the structures we live in, in real life (IRL).

By looking at society and culture through the lens of technology, we can see IRL inequalities reflected in AI like a mirror. As Virginia Eubanks puts it:

Technology serves as an amplifier of underlying values and social structures.

Source: https://virginia-eubanks.com/2011/04/18/if-technology-is-not-the-answer-what-is/

The space of Artificial Intelligence is mirroring the deep-rooted inequalities, biased beliefs and discrimination (racial, gender, class-based) that exist IRL. Why are we copy-pasting the shittiness of IRL structures into the digital systems we’re creating?

—

Outsourcing decision making to algorithms via scientists and engineers

All the algorithm has done is move human discretion from the mostly working-class caseworkers to the engineers and scientists who built the model.

Source: Virginia Eubanks’s talk ‘Automating Inequality’ https://datasociety.net/events/databite-no-106-automating-inequality-virginia-eubanks-in-conversation-with-alondra-nelson-and-julia-angwin/

Automated decision systems are currently being used by public agencies, reshaping how criminal justice systems work via risk assessment algorithms and predictive policing, optimizing energy use in critical infrastructure through AI-driven resource allocation, and changing our employment and educational systems through automated evaluation tools and matching algorithms.

Source: https://ainowinstitute.org/aiareport2018.pdf

There is a real impact on people’s lives when we couple machine bias with decision making, as Hannah Devlin neatly puts it:

(…) existing social inequalities and prejudices are being reinforced in new and unpredictable ways as an increasing number of decisions affecting our everyday lives are ceded to automatons.

And then there’s war and guns:

…From his own experience in the military serving as a special operations agent, Scharre says he has been in a situation in which an autonomous weapon would have killed a girl that the Taliban was using as a scout, but that soldiers did not target. He says it’s situations like that which highlight differences between what is legal in the laws of war and what is morally right, something that autonomous weapons might not distinguish. “That is one of the concerns that people raise about autonomous weapons is a lack of an ability to feel empathy and to engage in mercy in war. And that if we built these weapons, they would take away a powerful restraint in warfare that humans have.” (Ari Shapiro, host, NPR)

Source: Machine learnings https://mailchi.mp/usejournal/ai-war-human-empathy?e=b2b07902df

For more, David Dao compiled a great list called Awful AI: https://github.com/daviddao/awful-ai

—

Black boxing AI

Flaws in most AI systems aren’t easy to fix, in large part because they are black boxes: the data goes in and the answer comes out without any explanation for the decision. Compounding the issue is that the most advanced systems are jealously guarded by the firms that create them. This not only poses a challenge in determining where bias creeps in, it also makes it impossible for the person denied parole or the teacher labeled a low performer to appeal, because they have no way of understanding how a decision was reached.

Source: https://www.fastcompany.com/40536485/now-is-the-time-to-act-to-stop-bias-in-ai

The real risk with AI isn’t malice but competence. A superintelligent AI will be extremely good at accomplishing its goals, and if those goals aren’t aligned with ours, we’re in trouble.

Having quoted Stephen Hawking on Reddit, the Quartz article goes on: (…) Right now, the real danger in the world of artificial intelligence isn’t the threat of robot overlords; it’s a startling lack of diversity.

Source: https://qz.com/531257/inside-the-surprisingly-sexist-world-of-artificial-intelligence/

Lack of diversity plays a big role in creating and perpetuating these inequalities. Tabitha Goldstaub gives a few examples relevant to the issues around gender:

“Men and women have different symptoms when having a heart attack — imagine if you trained an AI to only recognize male symptoms,” she says. “You’d have half the population dying from heart attacks unnecessarily.” It’s happened before: crash test dummies for cars were designed after men; female drivers were 47% more likely to be seriously hurt in accidents. Regulators only started to require car makers to use dummies based on female bodies in 2011.

Source: https://www.teenvogue.com/story/artificial-intelligence-isnt-good-for-women-but-we-can-fix-it

Another example where a lack of diversity ties into a gender stereotype, and where the same logic is used to justify neglecting the complexities of decision making:

“Our culture perpetuates the idea that women are somehow mysterious and unpredictable and hard to understand,” says Marie desJardins, a professor of computer science at the University of Maryland, Baltimore County whose research focuses on machine learning and intelligent decision-making. “I think that’s nonsense. People are hard to understand.”

Source: https://qz.com/531257/inside-the-surprisingly-sexist-world-of-artificial-intelligence/

As Virginia Eubanks puts it, algorithmic decision-making systems treat human decision making as something we can’t understand, stamping it with the same ‘mysterious and unpredictable’ label that then justifies putting it in a black box and hoping for the best. #fail

To finish, some excerpts from an excellent paper, ‘Algorithmic Bias in Autonomous Systems’, which also discusses the notions of statistical and moral deviation:

The possibility of algorithmic bias is particularly worrisome for autonomous or semi-autonomous systems, as these need not involve a human “in the loop” (either active or passive) who can detect and compensate for biases in the algorithm or model. In fact, as systems become more complicated and their workings more inscrutable to users, it may become increasingly difficult to understand how autonomous systems arrive at their decisions.

(…)

For example, many professions exhibit gender disparities, as in aerospace engineering (7.8% women) or speech & language pathology (97% women) [https://www.bls.gov/cps/cpsaat11.htm]. These professions clearly exhibit statistical biases relative to the overall population, as there are deviations from the population-level statistics. Such statistical biases are often used as proxies to identify moral biases; in this case, the underrepresentation of women in aerospace engineering may raise questions about unobserved, morally problematic structures working to the disadvantage of women in this area. Similarly, the over-representation of women in speech and language pathology may represent a moral bias,

(…)

Moreover, these issues often cannot be resolved in a purely technological manner, as they involve value-laden questions such as what the distribution of employment opportunities ought to be (independently of what it actually empirically is), and what factors ought and ought not to influence a person’s employment prospects.

Moreover, this type of algorithmic bias (again, whether statistical, moral, legal, or other) can be quite subtle or hidden, as developers often do not publicly disclose the precise data used for training the autonomous system. If we only see the final learned model or its behavior, then we might not even be aware, while using the algorithm for its intended purpose, that biased data were used.

(…)

That is, there might be cases in which we deliberately use biased training data, thereby yielding algorithmic bias relative to a statistical standard, precisely so the system will be algorithmically unbiased relative to a moral standard.

Source: https://www.cmu.edu/dietrich/philosophy/docs/london/IJCAI17-AlgorithmicBias-Distrib.pdf
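The paper's distinction between statistical and moral bias can be made concrete with a little arithmetic. The sketch below is a hypothetical illustration, not the authors' method: it measures how far each group's share of a toy training set deviates from a chosen reference, then computes per-example weights (a standard reweighting technique, swapped in here for illustration) so that the weighted data matches a target distribution. The toy set reuses the 7.8% figure quoted above for aerospace engineering; the 50/50 target is an assumption standing in for a moral standard, which, as the excerpt stresses, is a value-laden choice rather than a technical one.

```python
from collections import Counter


def group_shares(groups):
    """Fraction of examples belonging to each group."""
    counts = Counter(groups)
    total = len(groups)
    return {g: n / total for g, n in counts.items()}


def deviation_from(groups, reference):
    """Observed share minus reference share, per group (a statistical bias)."""
    shares = group_shares(groups)
    return {g: shares.get(g, 0.0) - ref for g, ref in reference.items()}


def reweight(groups, target):
    """Per-example weights so each group's weighted share equals target[g].

    Every group present in `groups` must also appear in `target`.
    """
    shares = group_shares(groups)
    return [target[g] / shares[g] for g in groups]


def weighted_share(groups, weights, group):
    """Share of the total weight carried by `group`."""
    in_group = sum(w for g, w in zip(groups, weights) if g == group)
    return in_group / sum(weights)


# Hypothetical toy training set: 78 women and 922 men (7.8% women).
groups = ["woman"] * 78 + ["man"] * 922

# Assumed target standing in for a moral standard (a value judgement).
target = {"woman": 0.5, "man": 0.5}

deviation = deviation_from(groups, target)
print({g: round(d, 3) for g, d in deviation.items()})

weights = reweight(groups, target)
print(round(weighted_share(groups, weights, "woman"), 3))
```

Training on the reweighted data is the move the last excerpt describes: the data is deliberately biased relative to the observed statistics so that the system is unbiased relative to the chosen moral standard. Choosing that standard is the part no code can settle.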

—

In summary, the high-level point is that the lack of diversity in the tech industry leads to dominant (and generally oppressive) discourses, because they come from a single, unchallenged, privileged perspective; these discourses mirror and perpetuate the inequalities of IRL structures.

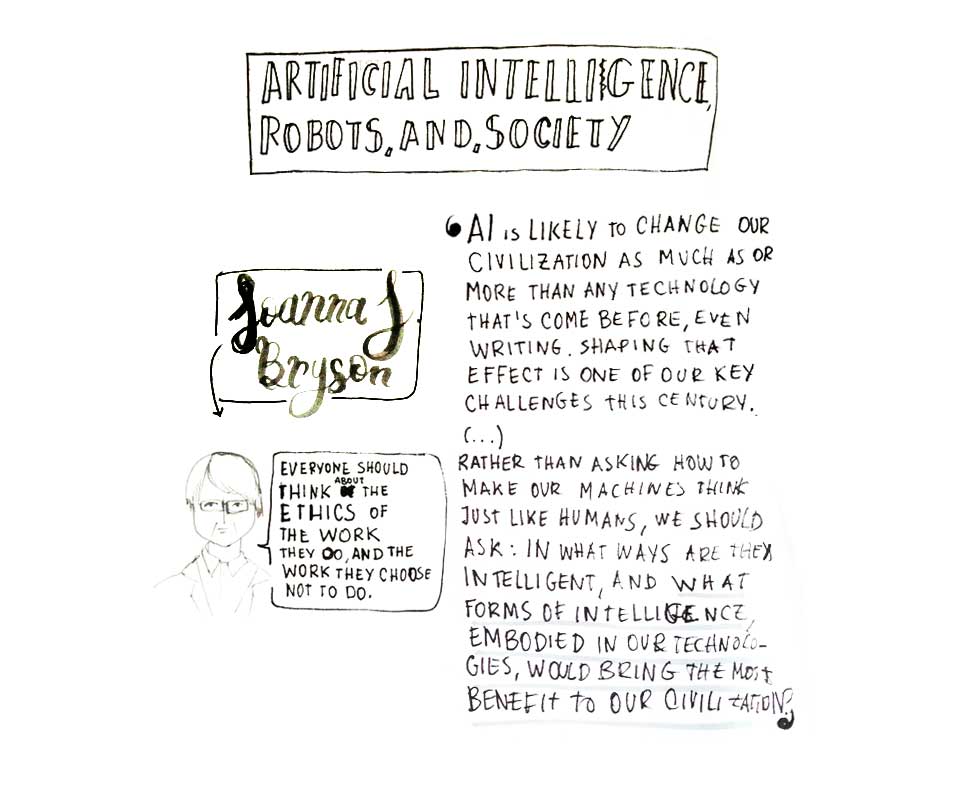

To end on a more positive note, we could frame future engagements with AI by focusing on what forms of intelligence would bring the most benefit to us:

Rather than asking how to make our machines think just like humans, we should ask: In what ways are they intelligent, and what forms of intelligence, embodied in our technologies, would bring the most benefit to our civilization?

Source: ‘Why Watson is Real Artificial Intelligence’ by Miles Brundage and Joanna Bryson http://www.slate.com/blogs/future_tense/2014/02/14/watson_is_real_artificial_intelligence_despite_claims_to_the_contrary.html

Resources:

- https://ada-ai.org/

- http://www.fast.ai/

- http://ai-4-all.org/

- https://www.forbes.com/sites/mariyayao/2017/05/18/meet-20-incredible-women-advancing-a-i-research/#446bc63026f9

- https://blog.openai.com/preparing-for-malicious-uses-of-ai/

- FATE (Fairness, Accountability, Transparency and Ethics in AI), Microsoft Corp.

- Awful AI: a curated list to track current scary usages of AI, hoping to raise awareness of its misuses in society https://github.com/daviddao/awful-ai

“Before releasing an AI system, companies should run rigorous pre-release trials to ensure that they will not amplify biases and errors due to any issues with the training data, algorithms, or other elements of system design.” https://medium.com/@AINowInstitute/the-10-top-recommendations-for-the-ai-field-in-2017-b3253624a7
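One small piece of such a pre-release trial might be a check that compares how often a system gives a favourable outcome to each group. The sketch below is hypothetical and deliberately narrow: it borrows the "four-fifths" rule of thumb from US employment-selection guidelines as its threshold (an assumption, not something AI Now prescribes), and the predictions are invented toy data. Passing a check like this does not show a system is fair; failing it is a signal to look closer before release.

```python
def selection_rates(predictions, groups):
    """Fraction of favourable (1) predictions for each group."""
    totals, favourable = {}, {}
    for pred, group in zip(predictions, groups):
        totals[group] = totals.get(group, 0) + 1
        favourable[group] = favourable.get(group, 0) + pred
    return {g: favourable[g] / totals[g] for g in totals}


def passes_disparity_check(predictions, groups, threshold=0.8):
    """True if the lowest group rate is at least `threshold` times the highest."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    if highest == 0:
        # No favourable outcomes at all: the ratio is undefined, treated as no disparity.
        return True
    return min(rates.values()) / highest >= threshold


# Hypothetical toy predictions (1 = e.g. "low risk"), ten people per group.
groups = ["A"] * 10 + ["B"] * 10
predictions = [1] * 6 + [0] * 4 + [1] * 3 + [0] * 7

print(selection_rates(predictions, groups))         # {'A': 0.6, 'B': 0.3}
print(passes_disparity_check(predictions, groups))  # False: 0.3 / 0.6 = 0.5 < 0.8
```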
